load("./data/interim/CB619.RData")Appendix B — Probability aggregation

At the core of GeoPressure, we try to estimate the position of the bird based on a pressure mismatch of the geolocator and a weather reanalysis dataset ERA5. A major challenge in this process is the transformation of map of errors (mismatch) into a probability.

In this chapter, we present this challenge, look at the theory behind it, and explore possible solutions using Great Reed Warbler (18IC) as an example.

B.1 Problem presentation

For each stationary period, we estimate the probability of the position \(\textbf{x}\) of the bird based on a pressure timeseries measured by the geolocator \(P_{gl}[t] \quad \forall t \in [1,\ldots,n]\), which, in a Bayesian framework can be written as, \[p(\textbf{x}\mid P_{gl}[1],\ldots,P_{gl}[n]) \propto p(P_{gl}[1],\ldots,P_{gl}[n] \mid \textbf{x}) p(\textbf{x}).\]

We are interested here in determining the likelihood term \(p(P_{gl} \mid \textbf{x})\) which represents the probability of observing the timeseries \(P_{gl}\) knowing that the bird is at location \(\textbf{x}\). To quantify this probability, we look at the mismatch between \(P_{gl}\) and the pressure timeseries of the ERA5 database \(P_{ERA5}(\textbf{x})\) extracted at location \(\textbf{x}\). We can reformulate the likelihood as a probability function \(f\) of an error term \(\varepsilon(\textbf{x})\) which measures a distance between \(P_{gl}\) and \(P_{ERA5}(\textbf{x})\) \[p(P_{gl} \mid \textbf{x}) = f(\varepsilon[1],\ldots,\varepsilon[n])\]

This formulation of the problem helps us to split our problem in two: first defining an error term \(\varepsilon[t]\) and secondly defining the likelihood function \(f\).

B.1.1 Error term

In most cases, we would expect to measure the error term with a simple difference \(P_{ERA5}(\textbf{x})[t]-P_{gl}[t].\) However, within a ERA5 grid cell of 9-30km, we can expect a wide range of altitude at which the bird can be located. As such any offset between the two timeseries might be due to nothing more than a difference of altitude.

To solve this issue, we remove the mean pressure difference, essentially ignoring the absolute value of pressure (and altitude) such that the error term only quantify the mismatch of the temporal variation, \[\varepsilon[t] = \left( P_{ERA5}(\textbf{x})[t]-P_{gl}[t]\right) - \left( \frac{1}{n}\sum_{i=1}^{n} P_{ERA5}(\textbf{x})[i]-P_{gl}[i] \right) .\]

This way of building the error term has some important consequences. A timeseries of only one datapoint always yields zero error at all locations, resulting in an equally probable map. As the number of datapoints increases, the error term becomes more and more able to distinguish between “good” and “bad” locations.

B.1.2 Error term for the Great Reed Warbler

Let’s load the data and filter

We are using the GeoPressure API with the geopressure_map_mismatch() function to measure the mismatch of the pressure time series. Because the API can realistically return only a single map per stationary period, it aggregates the error time series with the Mean Squared Error (MSE), \[\mathit{MSE} = \frac{1}{n}\sum_{t=1}^{n} \varepsilon[t]^2.\]

The MSE shows where the match of pressure is the best, excluding open water (ERA5-LAND does not support water). tag$stap also contains a column nb_sample which indicates the number of datapoints used to compute the MSE \(n\). This value can only be up to the value max_sample.

plot(tag, type = "map_pressure_mse")| stap_id | start | end | known_lat | known_lon | include | nb_sample |

|---|---|---|---|---|---|---|

| 1 | 2021-07-01 02:30:10 | 2021-09-24 00:15:10 | 37.28681 | -82.30497 | TRUE | 0 |

| 2 | 2021-09-24 11:15:10 | 2021-09-24 23:45:10 | NA | NA | TRUE | 13 |

| 3 | 2021-09-25 10:45:10 | 2021-09-25 23:45:10 | NA | NA | TRUE | 14 |

| 4 | 2021-09-26 12:45:10 | 2021-09-27 04:15:10 | NA | NA | TRUE | 16 |

| 5 | 2021-09-27 08:15:10 | 2022-04-06 00:45:10 | NA | NA | TRUE | 100 |

| 6 | 2022-04-06 10:15:10 | 2022-04-07 00:15:10 | NA | NA | TRUE | 14 |

B.2 Gaussian likelihood function

In order to find an appropriate likelihood function, we first need to assume a distribution of the error. The sources of error are (1) the sensor measurement error, (2) the ERA5 reanalysis error and (3) the altitudinal movement of the bird during this time. Because we are removing the mean error, we can ignore any long-term errors (e.g., constant temporal error in ERA5 or biases in the geolocator sensor).

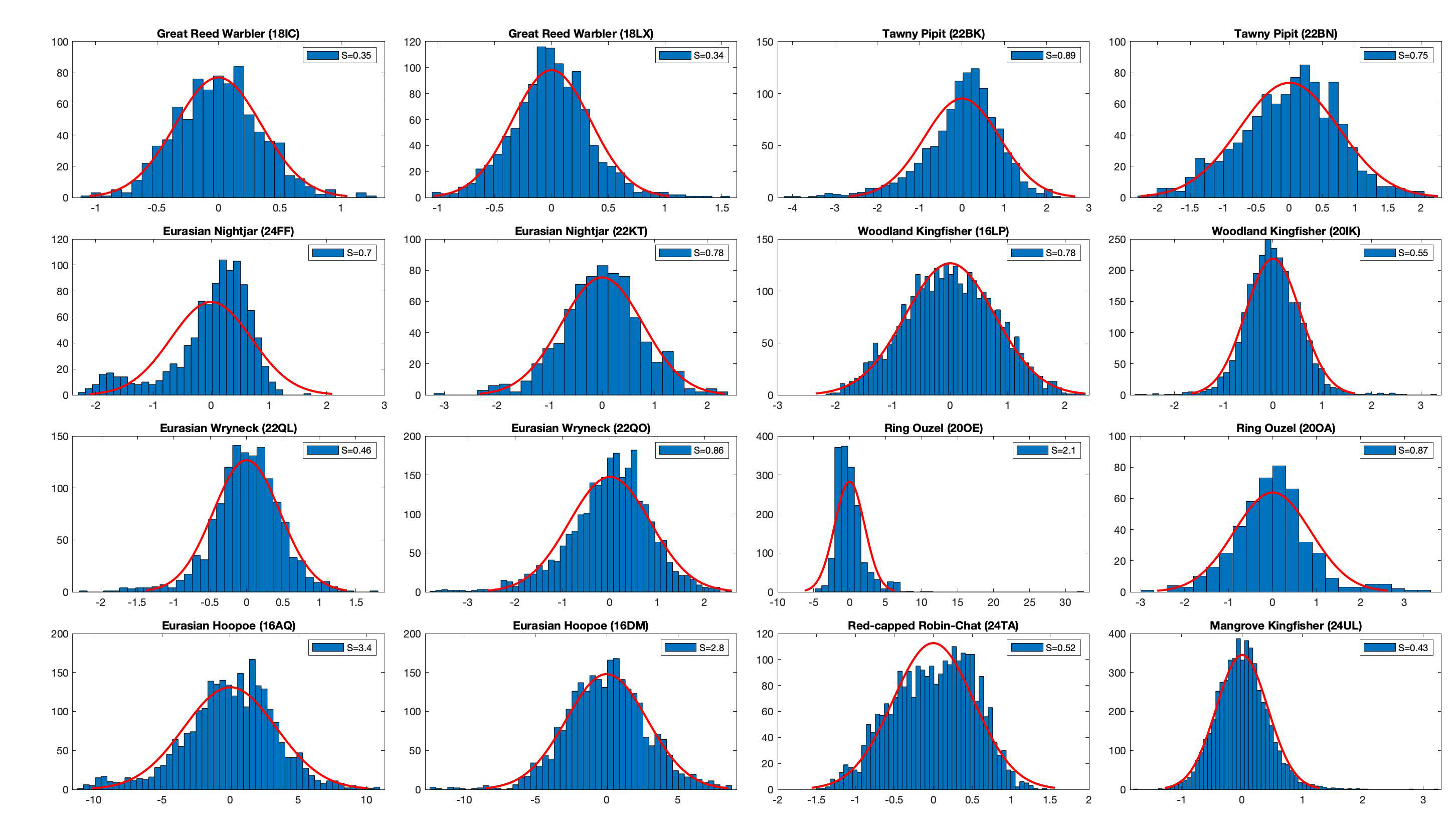

The figure below shows the error distribution at the known location of equipment and retrieval.

They all look close enough to a Gaussian distribution. In the case of the Great Reed Warbler, the standard deviation is around 0.5.

They all look close enough to a Gaussian distribution. In the case of the Great Reed Warbler, the standard deviation is around 0.5.

sd <- 0.5Therefore, assuming a Gaussian distribution of our error, the Gaussian likelihood of a multivariate normal distribution is given by \[ f(\boldsymbol{\varepsilon})={\frac {1}{(2\pi)^{n/2} \sqrt{\det(\boldsymbol{\Sigma})}}}\exp \left(-\frac{1}{2} \boldsymbol{\varepsilon} \boldsymbol{\Sigma}^{-1} \boldsymbol{\varepsilon} \right).\] with the vector notation \(\boldsymbol{\varepsilon }=[\varepsilon[1],\ldots,\varepsilon[n]]\) and where the covariance matrix \(\boldsymbol{\Sigma}\) contains the variance of two datapoints \(\boldsymbol{\Sigma}_{t_1,t_2} = \operatorname {E}[ \varepsilon[t_1] \varepsilon[t_2] ]\).

B.2.1 Independence of errors

As the covariance is difficult to quantify explicitly, we can first look at the very strong assumption of independence of the error, \(\varepsilon_t \overset{i.i.d.}{\sim} \mathcal{N}(0,\sigma)\). In this case, the Gaussian likelihood function \(f_{ind}\) is simply the product of the normal probability density function of each error \(\varepsilon[t]\), \[ f_{ind}(\boldsymbol{\varepsilon})=\prod _{t=1}^{n}f_\mathcal{N}(\varepsilon[t])=\left({\frac {1}{2\pi \sigma ^{2}}}\right)^{n/2}\exp \left(-{\frac {\sum _{t=1}^{n}\varepsilon[t]^2}{2\sigma ^2}}\right).\]

We can re-write this equation as a function of the MSE \[ f_{ind}(\boldsymbol{\varepsilon})=\left({\frac {1}{2\pi \sigma ^{2}}}\right)^{n/2}\exp \left(-n{\frac {\mathit{MSE}}{2\sigma ^2}}\right).\]

Using this equation, we can compute the probability for each stationary periods. This function corresponds the one used in geopressure_map_likelihood() using the following parameters

tag_tmp <- geopressure_map_likelihood(

tag,

sd = sd,

log_linear_pooling_weight = \(n) 1

)

plot(tag_tmp, type = "map_pressure")Assuming independence, the Gaussian likelihood transforms the MSE into probability with narrow ranges of uncertainties. So narrow that for long stationary period (#7 and #9), the entire map is 0.

This comes from the multiplication of probability assumed in the independence case (see equation above). The underlying assuming of the multiplication operator is the conjunction of probabilities, where aggregating two information is done with the AND operator: \(P(A~\text{and}~B) = P(A)\times P(B)\). Only the information content overlapping every datapoint of information is kept.

B.2.2 Quantification of the errors dependence

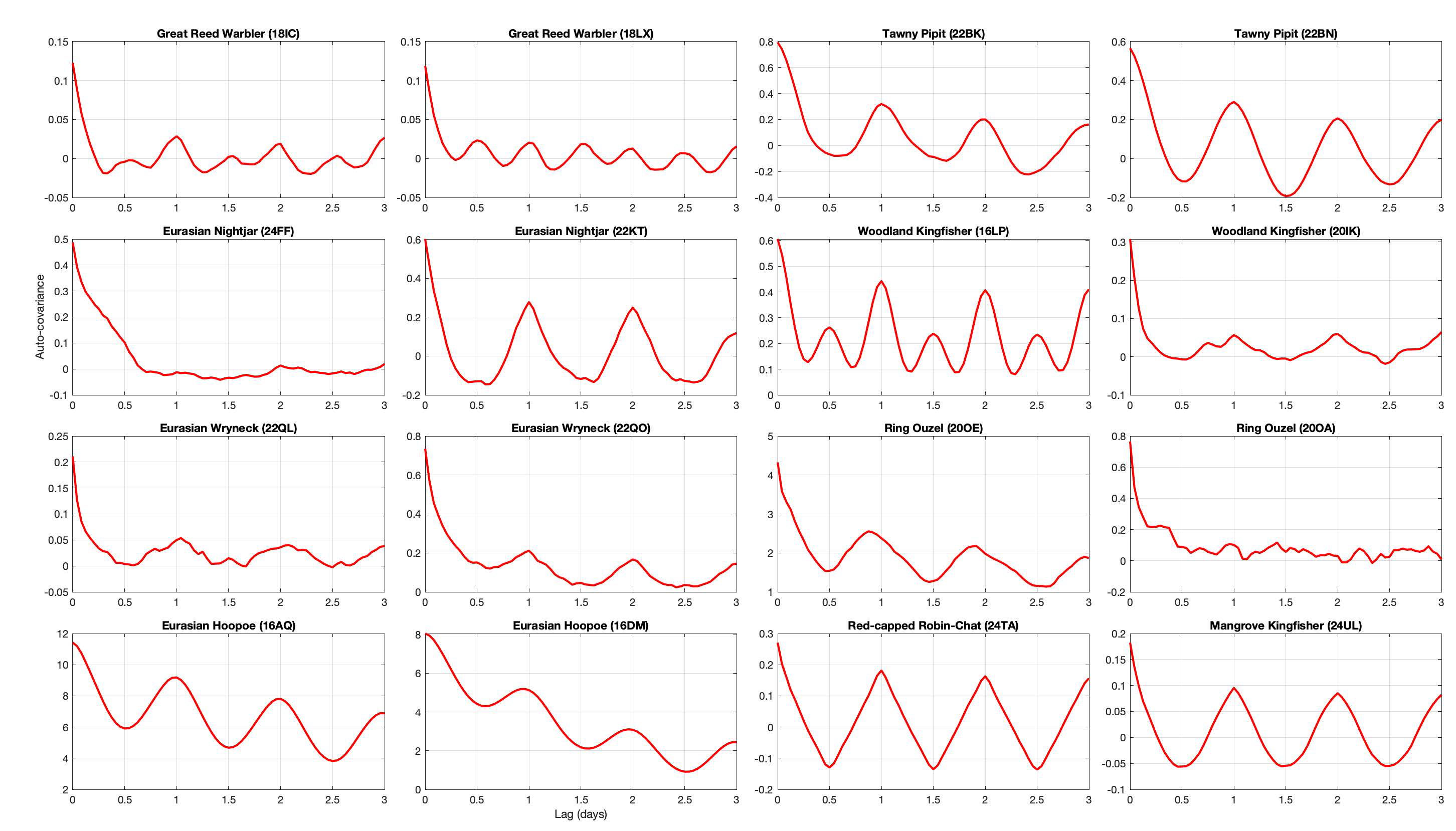

Plotting the auto-covariance at the calibration site for all the species allows us to see a temporal pattern in the error. For most birds, we can see a clear daily fluctuation which is certainly due to the bird’s daily movement for commute between feeding and roosting sites. The auto-covariance approaches 0 for all birds, which is expected as we removed the mean value. However, the sill is reached between 6 and 12 hours depending on the bird (the hoopoe doesn’t reach it after 3 days!).

From this observation, one option would be to construct a covariance matrix based on the auto-covariance. While this would look like the cleanest way of doing it, we have two issues.

- The first one is the absence of stationarity. Indeed, the auto-covariance amplitude (and potentially shape) is strongly influenced by the vertical displacement of the bird, which is strongly correlated with topography. For instance, the Eurasian Hoopoe has a high variance and large temporal range because it breeds at the feet of the Alps. During the rest of the year, it lives in rather flat area. Therefore, the covariance built from the breeding site would not be appropriate for the other sites.

- The second issue has to do with the definition of our error term and the different duration of stationary period.

B.3 Probability aggregation

In the rest of this vignette, we take a different angle, re-framing the problem differently and exploring other options to compute the likelihood.

In the field of probability aggregation, the problem of combining sources of information with overlapping/redundant content is frame as finding a pooling operator \(F\) such that, \[p(\textbf{x}\mid \varepsilon[1],\ldots,\varepsilon[n]) \approx F(p(\textbf{x} \mid \varepsilon[1]) ,\ldots,p(\textbf{x} \mid \varepsilon[n])),\] which, with our Gaussian assumption, we can written, \[ F(f(\varepsilon[1]),\ldots,f(\varepsilon[n]))=F(\boldsymbol{\varepsilon})\]

B.3.1 Introduction to Log-linear pooling

The most popular aggregation function is the log-linear pooling,

\[F(\boldsymbol{\varepsilon}) \propto \prod_{t=1}^n f(\varepsilon[t])^{w_t}\]

This equation also relies on the principle of conjunction of probability, but it uses a weight \(w_t\) which is related to the new information brought by each additional \(\varepsilon[t]\). Formally, it can be defined by \[w_t=\frac{\ln p(\varepsilon[t] \mid \boldsymbol{x},\varepsilon[1],\ldots \varepsilon[t-1])}{\ln p(\varepsilon[t] \mid \boldsymbol{x})}.\] Allard, Comunian, and Renard (2012) is a great resource to learn more about probability aggregation and log linear pooling.

The log-linear pooling aggregation simplifies to the case of Gaussian independence when \(w_t=1\). We have already explored this case earlier.

In the more general case, using the Gaussian probability density function formula, we can write, \[f(\varepsilon[t])^{w_t} = \left({\frac {1}{2\pi \sigma ^{2}}}\right)^{w_t/2}\exp \left(-w_t{\frac {\varepsilon[t]^2}{2\sigma ^2}}\right).\]

As such, if we assume the weight to be constant \(w_t=w\), we can rewrite the pooling aggregator as \[F(\boldsymbol{\varepsilon}) \propto \left({\frac {1}{2\pi \sigma ^{2}}}\right)^{\frac{wn}{2}} \exp \left(-{\frac {w}{2\sigma ^2}}\sum _{t=1}^{n}\varepsilon[t]^2\right),\]

and even write it as a function of the MSE, \[F(\boldsymbol{\varepsilon}) \propto \left({\frac {1}{2\pi \sigma ^{2}}}\right)^{\frac{wn}{2}} \exp \left(-{\frac {wn}{2\sigma ^2}}\textit{MSE}\right).\]

B.3.2 Log-linear pooling, \(w=1/n\)

An interesting case is if \(w=1/n\), so that \(\sum_t w_t=1\). This would simplify the log-linear pooling aggregator to \[ F(\boldsymbol{\varepsilon})={\frac {1}{\sigma\sqrt{2\pi} }}\exp \left(-{\frac {1}{2\sigma ^2}\mathit{MSE}}\right),\] which can be interpreted as the Gaussian probability distributions of the MSE. The length of the timeseries \(n\) has disappeared from the equation, so that the aggregation depends only on the mean square of the errors, regardless of how many datapoints.

We can try this and see the influence on the probability map.

tag_tmp <- geopressure_map_likelihood(

tag,

sd = sd,

log_linear_pooling_weight = \(n) 1 / n

)

plot(tag_tmp, type = "map_pressure")As expected from the disappearance of n, the uncertainty is now completely independent from the duration of the stationary period.

This is again obviously not what we want, but it shows the other extreme of the log-linear pooling.

B.3.3 Log-linear pooling, \(w = \log(n)/n\)

For this study, we chose to use \(w=\frac{\log(n)}{n}\), which result in the pooling aggregation

\[F(\boldsymbol{\varepsilon}) \propto \left({\frac {1}{2\pi \sigma ^{2}}}\right)^{\frac{w\log(n)}{2}} \exp \left(-{\frac {\log(n)}{2\sigma ^2}}\textit{MSE}\right).\]

This scheme was designed to minimize the strength of \(1/n\)

B.3.4 Validation

Validation of uncertainty estimation is relatively difficult, especially when there are only a few datapoints and we assume that the behaviour of the bird might differ between calibration sites (equipment and retrieval) and the rest of its journey.

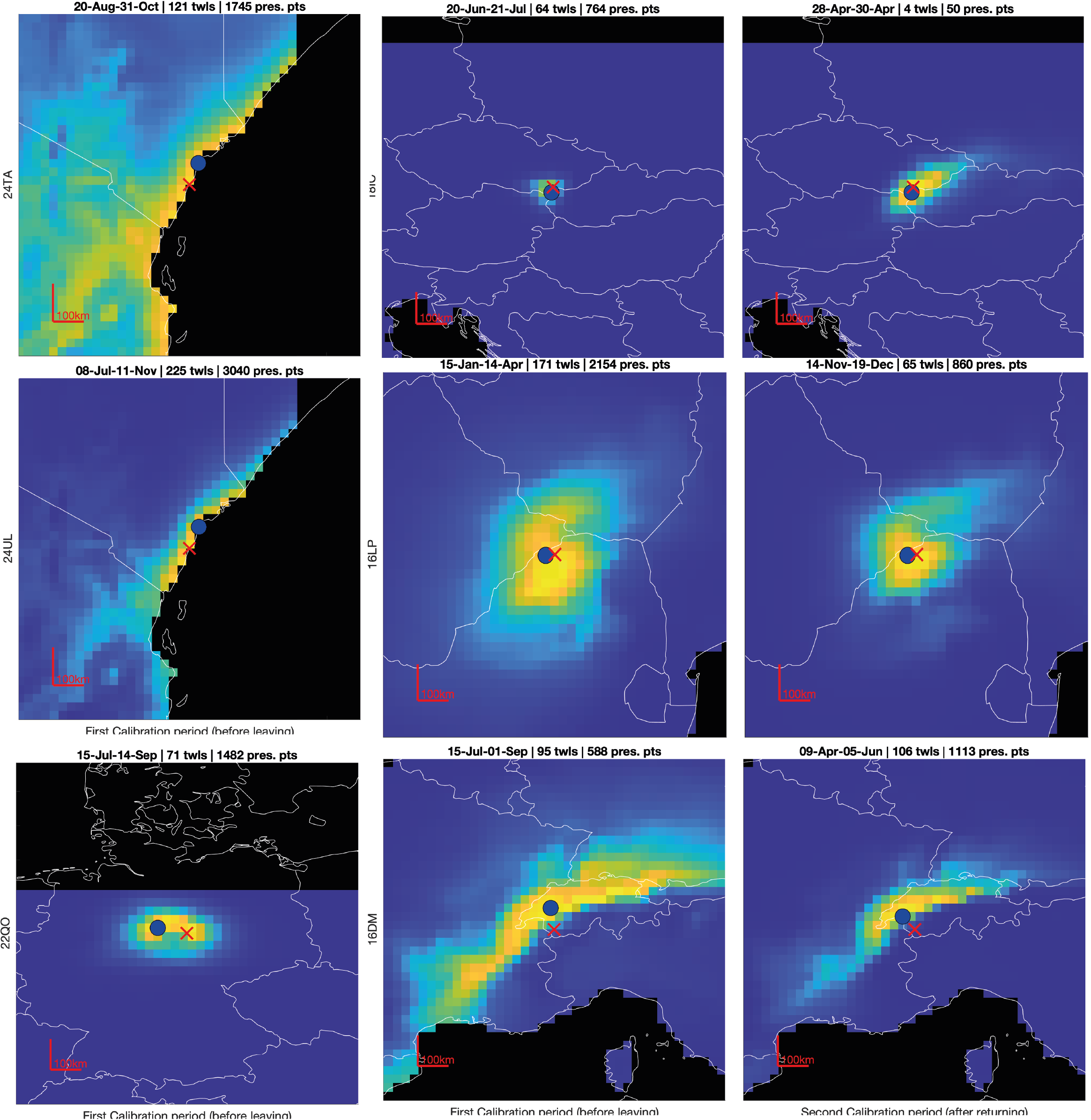

In the figure below, some examples of uncertainty estimate are shown. Ideally, we want the colored area to be small (low uncertainty), but when assessing an uncertainty, we also want the red cross to fall within the colored area. That is, if the red cross is too often outside, our estimator is too confident.

Furthermore, the shape of the uncertainty can be strongly anisotropic (e.g., hoopoe bottom right) making the distance between the most likely point (blue) and true value (red cross) a poor measure of uncertainty.

Assessing uncertainty relies on checking that that the red cross is distributed according to each uncertainty shape. A more formal way to quantifying the uncertainty is of the quantile of the true value \(q=p(\boldsymbol{x}\leq \boldsymbol{x}_{true})\), which corresponds to the probability that the variable is less than or equal to the true value. So, if the true value belongs to the distribution, the distribution of its quantile should be uniform.

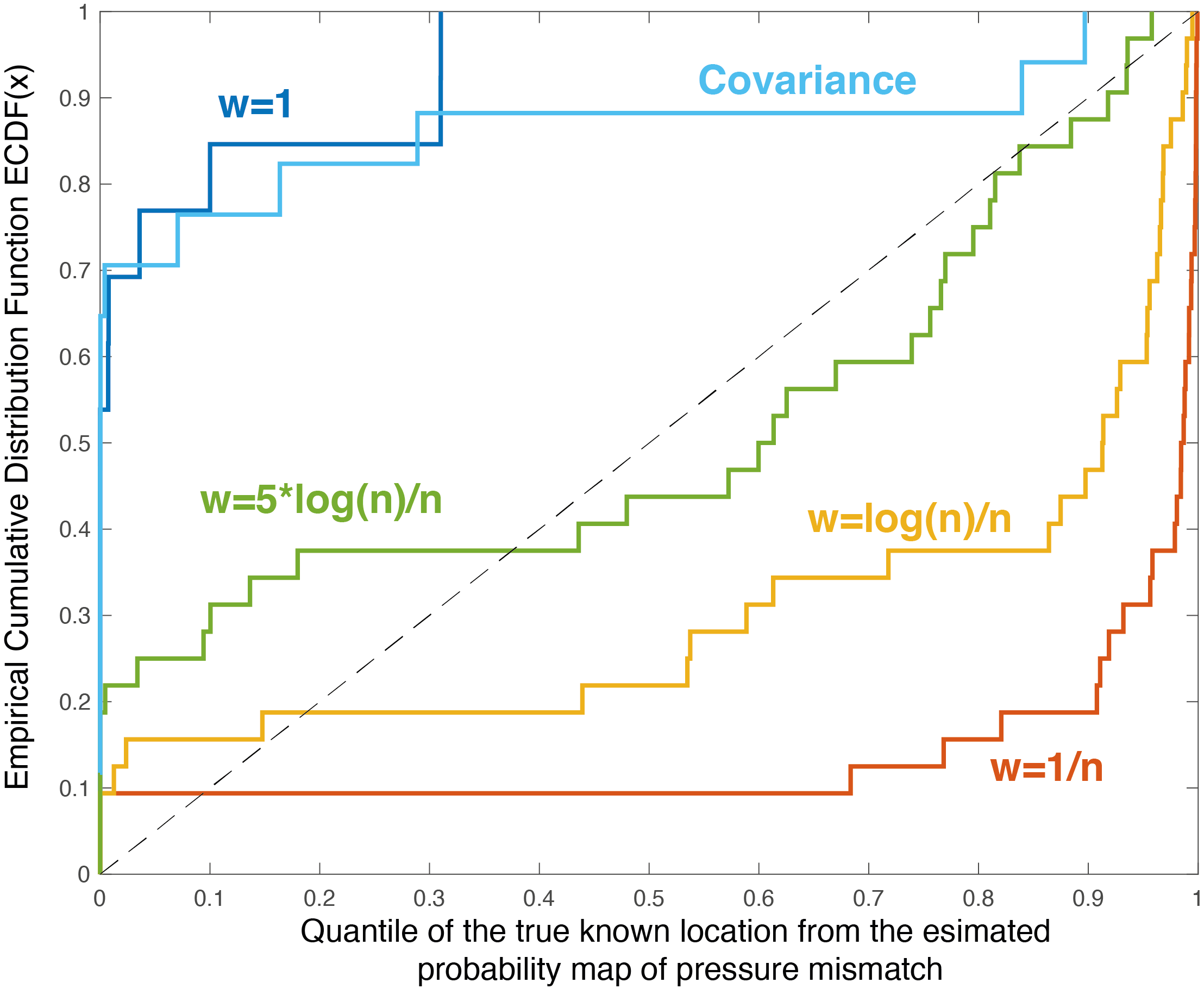

This can be visualised with the empirical cumulative distribution of the quantiles which should fall on the 1:1 line (like a qq-plot). The two extreme cases (\(w=1\) and \(w=1/n\)) show overconfidence (above the line) and underconfidence (below the line) respectively. Indeed, the distribution of quantile using the \(w=1/n\) method shows a lot of quantile values between 0.9-1, which indicates that the true value is within the 90% uncertainty contour (under-confident).

The method chosen (\(w=\log(n)/n\)) is doing better than both, but is still in general underconfident. Because the calibration the validation is performed with rather long temporal series (equipment and retrieval), one can expect that the labelling of pressure is better/easier than for other places. Thus, it seems more appropriate to be slightly underconfident while the method is being developed.

The covariance aggregation scheme was implemented by computing the covariance matrix of the multi-variate Gaussian distribution. The covariance function was build using the exact on the variogram of each species. This is, in theory, the more correct method, but it looks too overconfident. Thus we didn’t use it for now.

Looking forward, calibration is highly dependent on the local topography and the ability of the bird to move up and down. Manual edition/labelling has also a strong impact.